Which One to Choose: NVIDIA Device Plugin or NVIDIA GPU Operator?

- Written by: Pradip Khuman

- Kubernetes


There are two common ways to make NVIDIA GPUs available to Kubernetes workloads:

- the NVIDIA Device Plugin

- the NVIDIA GPU Operator.

1. NVIDIA Device Plugin for Kubernetes

- Primary Function: The NVIDIA Device Plugin lets Kubernetes schedule GPUs as a resource. You request GPUs in a pod manifest, and the Kubernetes scheduler places the pod only on a node with an available GPU.

How It Helps Users

- Simplicity and Control: The NVIDIA Device Plugin is straightforward to install and configure. Because you install the drivers, the container runtime and everything else on the node yourself, you keep full control over them.

- Flexibility for Small-scale Use Cases: If you’re running a smaller cluster or testing out GPU workloads, the NVIDIA Device Plugin is most likely the best option, since it adds almost no overhead beyond exposing GPU resources.

- Minimalist Approach: The NVIDIA Device Plugin provides no monitoring, and it does not install the GPU drivers or the container runtime. If you already have these components configured, the plugin simply uses them without any extra overhead.

Ideal For:

- Simple, non-production use cases that don’t need GPU management tooling.

- Small clusters where GPU use is simple and infrequent.

- Users who are comfortable managing NVIDIA drivers and container runtimes manually.

How It Works

The NVIDIA Device Plugin is deployed as a DaemonSet, so a copy of it runs on the GPU nodes in the Kubernetes cluster. Each copy advertises the node’s GPUs as the nvidia.com/gpu resource, which the Kubernetes scheduler uses to place pods according to their GPU requests.

Key Points:

- Basic: The plugin only exposes GPU resources; it does not manage GPU drivers or container runtimes.

- Manual setup: You have to install the NVIDIA drivers and the NVIDIA Container Toolkit (which provides nvidia-container-runtime) on each GPU node yourself. If you skip this, the plugin will not work.

- Minimal: It’s simple to deploy and doesn’t require much overhead, but it’s up to you to manage the drivers and runtime.
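Since the plugin assumes the driver and runtime are already on the node, a quick preflight on each GPU node can catch the most common failure before anything is installed in the cluster. The sketch below is a minimal illustration, not NVIDIA tooling: missing_tools is a hypothetical helper, and it only checks whether the named commands are on the PATH, not whether they actually work.

```bash
# Hypothetical preflight helper: print every named command that is not on
# PATH and return non-zero if any are missing.
missing_tools() {
  local tool status=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool"
      status=1
    fi
  done
  return "$status"
}

# On each GPU node, the device plugin setup depends on:
#   nvidia-smi  (installed with the NVIDIA driver)
#   nvidia-ctk  (installed with the NVIDIA Container Toolkit)
# Usage: missing_tools nvidia-smi nvidia-ctk || echo "install these first"
```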

Setup Steps:

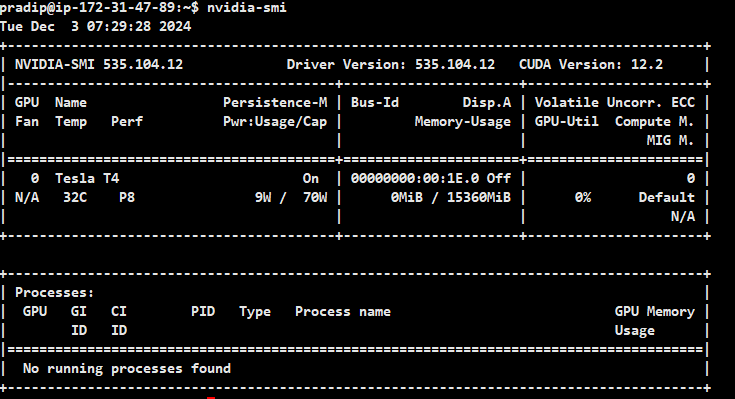

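1. Install the NVIDIA drivers on the node and verify that they were installed successfully. For more information, follow the official documentation.

```bash
# Download the 550.135 driver installer for x86_64; pick the version that matches your GPU and OS.
wget https://us.download.nvidia.com/XFree86/Linux-x86_64/550.135/NVIDIA-Linux-x86_64-550.135.run
chmod +x NVIDIA-Linux-x86_64-*.run
# The installer builds a kernel module, so the node needs gcc, make and headers for the running kernel.
sudo ./NVIDIA-Linux-x86_64-*.run
# Lists the detected GPUs and the driver version if the install succeeded.
nvidia-smi
```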
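Beyond the full nvidia-smi table, its query mode gives a compact per-GPU summary that is easy to check from a script. A minimal sketch, assuming the standard --query-gpu and --format=csv,noheader options; summarize_gpus and check_driver are hypothetical helper names, and summarize_gpus only reformats the CSV lines that nvidia-smi prints.

```bash
# Hypothetical helper: turn the CSV printed by
#   nvidia-smi --query-gpu=index,name,driver_version --format=csv,noheader
# (read from stdin) into one readable line per GPU.
summarize_gpus() {
  local idx name ver
  while IFS=',' read -r idx name ver; do
    # nvidia-smi puts a space after each comma; drop it from each field.
    printf 'GPU %s: %s (driver %s)\n' "$idx" "${name# }" "${ver# }"
  done
}

# Run on the GPU node after the installer finishes.
check_driver() {
  if ! command -v nvidia-smi >/dev/null 2>&1; then
    echo "nvidia-smi not found: the driver tools are not installed" >&2
    return 1
  fi
  nvidia-smi --query-gpu=index,name,driver_version --format=csv,noheader | summarize_gpus
}
```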

2. Install the NVIDIA Container Toolkit (which provides nvidia-container-runtime) on the node and configure containerd to use it. For more information, follow the official documentation.

```bash
# Add NVIDIA's apt repository and its signing key (Debian/Ubuntu).
curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \
  && curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \
    sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \
    sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

# Install the toolkit.
sudo apt-get update
sudo apt-get install -y nvidia-container-toolkit

# Register the nvidia runtime in containerd's config, then restart containerd.
sudo nvidia-ctk runtime configure --runtime=containerd
sudo systemctl restart containerd
```
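nvidia-ctk runtime configure adds an nvidia runtime entry to containerd’s config, which is worth confirming before moving on. As we understand NVIDIA’s device plugin README, it also expects nvidia to be containerd’s default runtime (default_runtime_name = "nvidia"; nvidia-ctk has a --set-as-default option for this), so the hypothetical check below reports that separately. Treat the default-runtime requirement as something to confirm against the docs for your plugin version; the sketch only greps the config file rather than parsing TOML.

```bash
# Hypothetical check: does a containerd config register the NVIDIA runtime,
# and is it the default? Pass the config path (defaults to the usual location).
check_containerd_config() {
  local cfg="${1:-/etc/containerd/config.toml}"
  if [ ! -r "$cfg" ]; then
    echo "missing: cannot read $cfg"
    return 1
  fi
  # The runtime entry is a TOML table whose name ends in "runtimes.nvidia]".
  if ! grep -qF 'runtimes.nvidia]' "$cfg"; then
    echo "missing: no nvidia runtime entry in $cfg"
    return 1
  fi
  if grep -Eq 'default_runtime_name[[:space:]]*=[[:space:]]*"nvidia"' "$cfg"; then
    echo "ok: nvidia runtime registered and set as default"
  else
    echo "partial: nvidia runtime registered but not the default"
  fi
}

# Usage on the node: check_containerd_config
```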

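3. Add the NVIDIA device plugin Helm repository and install the chart:

```bash
helm repo add nvdp https://nvidia.github.io/k8s-device-plugin
helm repo update
# Installs (or upgrades) the "nvdp" release in the nvidia-device-plugin namespace.
helm upgrade -i nvdp nvdp/nvidia-device-plugin --namespace nvidia-device-plugin --create-namespace --version 0.17.1
```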
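Once the plugin’s pods are running (kubectl get pods -n nvidia-device-plugin), each GPU node should advertise nvidia.com/gpu among its allocatable resources. The sketch below uses kubectl’s custom-columns output; list_gpu_allocatable and count_gpu_nodes are hypothetical helper names, and the counting step only parses the printed table.

```bash
# Show how many GPUs each node advertises to the scheduler (needs cluster access).
# The backslash escapes the dot inside the resource name "nvidia.com/gpu".
list_gpu_allocatable() {
  kubectl get nodes \
    -o 'custom-columns=NAME:.metadata.name,GPU:.status.allocatable.nvidia\.com/gpu'
}

# Read that table from stdin and count nodes advertising at least one GPU.
# Nodes without the resource show "<none>" in the GPU column.
count_gpu_nodes() {
  awk 'NR > 1 && $2 ~ /^[0-9]+$/ && $2 > 0 { n++ } END { print n + 0 }'
}

# Usage: list_gpu_allocatable | count_gpu_nodes
```

A count of zero usually points back to the driver or container runtime steps rather than to the plugin itself.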

Bingo! We’ve successfully completed the setup of the NVIDIA Device Plugin.

You can now request GPUs by setting the nvidia.com/gpu resource limit in your pod manifest. The example below runs NVIDIA’s CUDA vector-add sample on one GPU:

```bash
cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: gpu-pod
spec:
  restartPolicy: Never
  containers:
    - name: cuda-container
      image: nvcr.io/nvidia/k8s/cuda-sample:vectoradd-cuda12.5.0
      resources:
        limits:
          nvidia.com/gpu: 1 # requesting 1 GPU
  tolerations:
  - key: nvidia.com/gpu
    operator: Exists
    effect: NoSchedule
EOF
```
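The sample pod runs to completion, so its phase and log show whether the GPU was actually usable. A minimal sketch, assuming the vectoradd sample prints "Test PASSED" on success (as in NVIDIA’s documentation for this image) and that your kubectl supports --for=jsonpath in kubectl wait; vectoradd_passed and check_gpu_pod are hypothetical helper names.

```bash
# Return success if a vectoradd log (read from stdin) reports a passing run.
vectoradd_passed() {
  grep -q '^Test PASSED'
}

# Wait for the pod to finish, then check its log (needs cluster access).
# If the pod fails instead, the wait simply times out.
check_gpu_pod() {
  local pod="${1:-gpu-pod}"
  kubectl wait --for=jsonpath='{.status.phase}'=Succeeded "pod/$pod" --timeout=180s &&
    kubectl logs "$pod" | vectoradd_passed
}

# Usage: check_gpu_pod gpu-pod && echo "GPU scheduling works"
```

If the pod stays Pending instead, the scheduler found no node with a free nvidia.com/gpu; kubectl describe pod gpu-pod shows the reason.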

Crucial Consideration:

- Manual intervention: If you forget to install the NVIDIA drivers or the runtime, the device plugin will not work, which is a common mistake for beginners.

2. NVIDIA GPU Operator

The NVIDIA GPU Operator is a Kubernetes Operator designed to provide a comprehensive, automated solution for managing GPU resources in Kubernetes. It installs and manages not only the NVIDIA Device Plugin but also the NVIDIA GPU drivers, the NVIDIA Container Toolkit and monitoring tools (such as DCGM). The Operator is designed for production environments where you need full lifecycle management for GPUs.

- Primary Function: The GPU Operator automates everything required to run GPU workloads in Kubernetes, including the NVIDIA drivers, the container toolkit, monitoring tools and the device plugin. It also keeps these components running at the versions you configure, so GPU resources are managed correctly.

How It Helps Users

- Automation: The GPU Operator automates the setup, configuration and maintenance of all GPU-related components in Kubernetes, removing much of the manual intervention needed with the Device Plugin.

- Full Lifecycle Management: The Operator continuously monitors the GPU components, ensuring they are running optimally and automatically handling updates and health checks. If an issue arises, the Operator can auto-repair it.

- Monitoring and Metrics: The GPU Operator also deploys DCGM (Data Center GPU Manager) tooling, including the DCGM Exporter, which collects important GPU metrics such as memory usage, GPU temperature and GPU utilization and exposes them in Prometheus format. This lets users track GPU health without installing and configuring a separate GPU metrics exporter.

- Enhanced Scalability and Reliability: The GPU Operator’s self-healing properties make it a better choice for large-scale deployments, as it ensures that GPUs in a cluster remain functional and up-to-date without constant manual intervention.
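DCGM metrics reach users through the DCGM Exporter, which serves them in Prometheus text format. A minimal sketch for reading them by hand, assuming the operator’s exporter service is named nvidia-dcgm-exporter and listens on port 9400 in the gpu-operator namespace (check kubectl get svc -n gpu-operator). metric_by_gpu is a hypothetical helper; DCGM_FI_DEV_GPU_TEMP, DCGM_FI_DEV_FB_USED and DCGM_FI_DEV_GPU_UTIL are the exporter’s temperature, framebuffer-memory and utilization metrics.

```bash
# Hypothetical helper: read Prometheus text from stdin and print
# "gpu=<index> <value>" for every sample of the metric named in $1.
# Assumes each sample has a gpu="N" label and no trailing timestamp,
# so the value is the last field on the line.
metric_by_gpu() {
  awk -v m="$1" '
    index($0, m "{") == 1 && match($0, /[{,]gpu="[0-9]+"/) {
      # RSTART points at the "{" or "," before gpu="; the digits start 6 chars later.
      print "gpu=" substr($0, RSTART + 6, RLENGTH - 7) " " $NF
    }'
}

# Usage against a live cluster (service name and port are assumptions):
#   kubectl port-forward -n gpu-operator svc/nvidia-dcgm-exporter 9400:9400 &
#   curl -s localhost:9400/metrics | metric_by_gpu DCGM_FI_DEV_GPU_TEMP
#   curl -s localhost:9400/metrics | metric_by_gpu DCGM_FI_DEV_FB_USED
```

In practice Prometheus scrapes these metrics; the manual query is just a quick way to confirm the exporter is producing data.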

Ideal For:

- Large-scale, production environments where GPU usage is extensive and critical to business operations.

- Clusters with multiple nodes that need to be managed and maintained at scale.

- Users who prefer an automated and self-healing solution, where GPU management is completely handled by the operator.

How It Works

The GPU Operator simplifies the entire process of GPU management:

- It automates the installation and updates of the NVIDIA drivers and runtime on every node.

- It manages the lifecycle of the NVIDIA Device Plugin.

- It deploys monitoring solutions, like the DCGM exporter for GPU metrics.

- It ensures that the GPU environment is always up-to-date and self-healing in case of failure.

The GPU Operator is also configured through Kubernetes custom resources (CRDs), chiefly the ClusterPolicy resource, which lets it expose more information and manage GPU resources beyond just scheduling.
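Because the operator’s configuration lives in custom resources, listing the nvidia.com CRDs is a quick way to see what it manages. A minimal sketch: nvidia_crds is a hypothetical helper that filters kubectl get crd -o name output, and exactly which CRDs appear depends on the operator version.

```bash
# Hypothetical helper: read `kubectl get crd -o name` output from stdin and
# print only CRDs in the nvidia.com API group, without the type prefix.
nvidia_crds() {
  sed -n 's#^customresourcedefinition\.apiextensions\.k8s\.io/\(.*\.nvidia\.com\)$#\1#p'
}

# Usage against a live cluster:
#   kubectl get crd -o name | nvidia_crds
#   kubectl get clusterpolicies.nvidia.com   # the operator's main configuration object
```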

Key Points:

- Automatic setup: The GPU Operator installs everything for you, including the drivers, container runtime and monitoring tools.

- Full lifecycle management: It continuously monitors the health of the GPU components and updates them as necessary.

- Perfect for large clusters: Especially useful for multi-node clusters, since it automates the setup on every GPU node.

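Setup Steps:

```bash
# Add NVIDIA's NGC Helm repository.
helm repo add nvidia https://helm.ngc.nvidia.com/nvidia && helm repo update
# Install the operator into the gpu-operator namespace; --wait blocks until the chart's resources are ready.
helm install --wait --generate-name -n gpu-operator --create-namespace nvidia/gpu-operator --version=v25.3.0
```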
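helm install --wait returns once the chart’s own resources are up, but the driver, toolkit and validator pods that the operator then schedules on each GPU node can take several minutes. A minimal sketch for spotting stuck components; unhealthy_pods is a hypothetical helper that reads the STATUS column of kubectl get pods. If the nodes already have the driver and toolkit installed (for example, from the Device Plugin steps), the chart’s driver.enabled=false and toolkit.enabled=false values tell the operator not to deploy its own; confirm the value names for your chart version.

```bash
# Hypothetical helper: read `kubectl get pods -n gpu-operator --no-headers`
# from stdin and print every pod whose STATUS (3rd column) is neither
# Running nor Completed.
unhealthy_pods() {
  awk '$3 != "Running" && $3 != "Completed" { print $1 }'
}

# Usage against a live cluster:
#   kubectl get pods -n gpu-operator --no-headers | unhealthy_pods
# Empty output means every operator component has started or finished.
```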

Once the operator’s pods are running, the same CUDA sample pod confirms that GPUs are schedulable:

```bash
cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: gpu-pod
spec:
  restartPolicy: Never
  containers:
    - name: cuda-container
      image: nvcr.io/nvidia/k8s/cuda-sample:vectoradd-cuda12.5.0
      resources:
        limits:
          nvidia.com/gpu: 1 # requesting 1 GPU
  tolerations:
  - key: nvidia.com/gpu
    operator: Exists
    effect: NoSchedule
EOF
```

Crucial Consideration:

- More resources: Since the GPU Operator installs and manages more components, it requires more resources and overhead. For smaller, simpler environments, the Device Plugin might be a lighter choice.
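One way to put numbers on that overhead is to total what each setup’s pods actually consume. A minimal sketch, assuming metrics-server is installed so kubectl top works, and that kubectl prints CPU in millicores ("m") and memory in MiB ("Mi"); sum_top_usage is a hypothetical helper that adds up those two columns.

```bash
# Hypothetical helper: read `kubectl top pods --no-headers` output from stdin
# and total the CPU (millicores) and memory (MiB) columns. Adding 0 makes awk
# convert "12m" or "200Mi" to their leading number, dropping the unit.
sum_top_usage() {
  awk '{ cpu += $2 + 0; mem += $3 + 0 }
       END { printf "cpu=%dm memory=%dMi\n", cpu, mem }'
}

# Usage against a live cluster:
#   kubectl top pods -n gpu-operator --no-headers | sum_top_usage
#   kubectl top pods -n nvidia-device-plugin --no-headers | sum_top_usage
```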

Key Differences

| Feature | NVIDIA Device Plugin | NVIDIA GPU Operator |
|---|---|---|
| Setup | Manual (drivers, runtime, etc.) | Automated (drivers, runtime, plugin, monitoring) |
| Lifecycle Management | No automated updates/management | Automatic updates, monitoring and self-healing |
| Target Use Case | Smaller clusters or simpler GPU workloads | Large clusters, production-grade environments |
| Components Managed | Only the device plugin | Device plugin, drivers, runtime, monitoring |
| Configuration | Simple configuration | More complex but comprehensive setup |
| Monitoring | Requires manual setup | Includes built-in monitoring (DCGM) |
| Self-healing | No | Yes, automatically monitors and fixes issues |
| Resource Overhead | Low | Higher (due to the added components) |

Key Benefits for Users

| Feature | NVIDIA Device Plugin | NVIDIA GPU Operator |
|---|---|---|
| Automated Driver & Runtime Management | No, drivers and runtime must be installed and updated manually | Yes, the GPU Operator installs and manages GPU drivers and the container runtime automatically |
| Installation Complexity | Simple, manual installation of the device plugin | More complex, but automated and comprehensive installation (via Helm) |
| Component Management | Only exposes GPU resources to Kubernetes | Manages the entire GPU lifecycle, including drivers, runtime, device plugin and monitoring |
| Monitoring Support | Needs to be set up separately | Built-in GPU metrics collection (DCGM) with monitoring capabilities |
| Self-Healing & Maintenance | No built-in self-healing; manual intervention required | Yes, automatic health checks and recovery from failures |
| Scalability | Works well for small to medium-sized clusters | Designed for large-scale, production-grade environments |
| Use Case | Ideal for testing, small workloads or simple setups | Ideal for production clusters with heavy GPU workloads |
| Resource Overhead | Low, only requires GPU resources and basic setup | Higher, due to additional components for monitoring, lifecycle management, etc. |
| User Control | Gives users complete control over setup and configuration | Provides automation but offers less flexibility in manual configuration |

Conclusion: Which to Pick?

Use the NVIDIA Device Plugin if:

- You want something lightweight, and configuring drivers and the runtime by hand is not a problem.

- Your GPU workloads are fairly small and straightforward, or you are only experimenting with GPU features in Kubernetes.

- You are willing to handle troubleshooting yourself.

Use the NVIDIA GPU Operator if:

- You plan to deploy large-scale, production-grade GPU workloads, where automated management is a necessity.

- You want updates, monitoring and self-healing to be automatic.

- You need a complete solution that handles the whole GPU stack, including drivers, runtime, plugins and monitoring.

I hope this blog helps you decide between the NVIDIA Device Plugin and the NVIDIA GPU Operator.