AWS Cloud Cost Optimization for an AI-Driven Pathology Company

Overview

Managing cloud expenses is a top priority for businesses that rely on AI. Our client, a pioneering digital pathology company, faced soaring AWS costs driven by the high computational demands of AI training and development. To address this, our team partnered with the client to identify inefficiencies and implement cost-saving strategies, achieving significant savings without compromising performance or innovation.

Client Background

The client specializes in developing AI-driven solutions for pathology, such as diagnostic products powered by machine learning models. These models require extensive training datasets, high-performance computing (HPC), and constant experimentation—all of which contribute to substantial cloud expenses. The company’s AWS environment included:

- Multiple EC2 instances for AI training and inference.

- Large-scale storage on S3 for datasets.

- GPU-enabled resources for deep learning.

- Diverse services such as Lambda and CloudWatch for operational support.

Challenges

- Unpredictable Costs: Monthly AWS bills were inconsistent, making budget forecasting difficult.

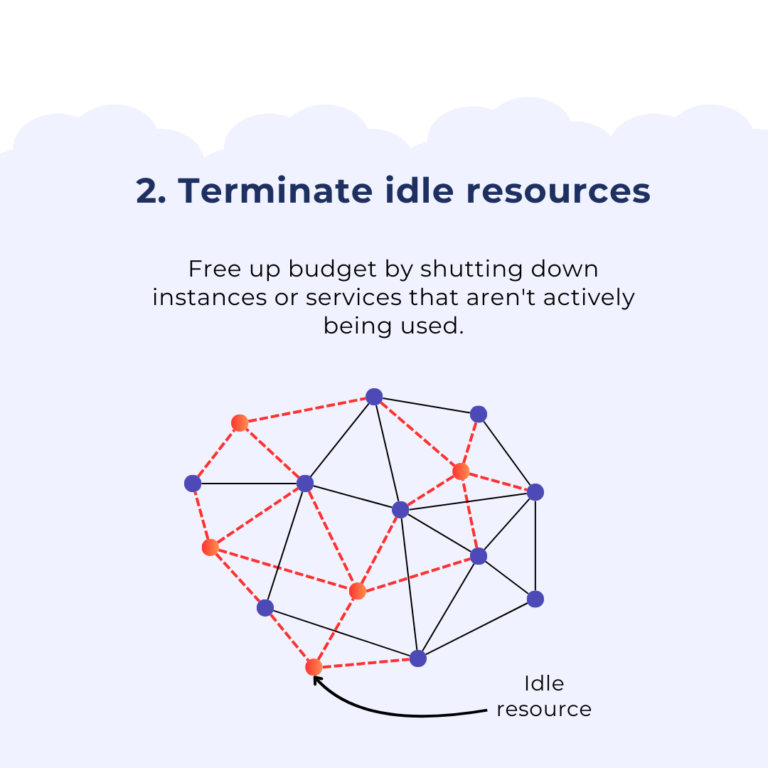

- Underutilized Resources: Many EC2 instances and storage buckets were underutilized or idle.

- Over-Provisioning: Resources were provisioned for peak usage, leading to waste during non-peak periods.

- Lack of Visibility: The firm lacked a comprehensive view of resource utilization and cost distribution.

Our Approach

We followed a structured approach to optimize the client’s AWS cloud costs:

Step 1: Assessment and Analysis

We conducted a detailed audit of the client’s AWS environment to identify inefficiencies and areas for cost savings. Tools like AWS Cost Explorer and Trusted Advisor provided insights into:

- Idle or underutilized EC2 instances.

- Unused Elastic IPs and outdated snapshots.

- Unmanaged EFS file systems and storage tiers.
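
As an illustration of how idle instances might be flagged during such an audit, here is a minimal sketch. It assumes CPU utilization samples have already been exported (for example from CloudWatch) into a plain dictionary. The function name, the 5% threshold, and the instance IDs are hypothetical and do not come from the client engagement.

```python
from statistics import mean


def find_idle_instances(cpu_samples, threshold_pct=5.0):
    """Return sorted IDs of instances whose average CPU is below threshold_pct.

    cpu_samples maps an instance ID to a list of CPU utilization percentages.
    The 5% default is an assumed cut-off, not an AWS recommendation.
    """
    idle = []
    for instance_id, samples in cpu_samples.items():
        # Skip instances with no data rather than guessing their state.
        if samples and mean(samples) < threshold_pct:
            idle.append(instance_id)
    return sorted(idle)


# Hypothetical hourly CPU utilization percentages per instance.
samples = {
    "i-training-01": [82.0, 91.5, 77.3],
    "i-notebook-02": [1.2, 0.8, 2.1],
    "i-inference-03": [35.0, 40.2, 38.7],
}
print(find_idle_instances(samples))
```

In practice, a check like this would likely also consider GPU, memory, and network metrics before an instance is marked as idle.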

Step 2: Right-Sizing and Auto-Scaling

We recommended:

- Right-Sizing: Adjusting EC2 instance types to match workloads, particularly for AI training jobs.

- Auto-Scaling Groups: Implementing dynamic scaling for instances based on demand to prevent over-provisioning.

- Lifecycle Management: Applying EFS lifecycle policies to automatically move data between storage tiers based on access patterns.
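
To make the idea of demand-based scaling concrete, the sketch below shows a simplified proportional rule: capacity grows or shrinks so that a chosen metric moves back toward its target. This is an illustrative approximation, not the algorithm AWS Auto Scaling uses internally, and the function name, metric values, and size limits are all assumptions.

```python
import math


def desired_capacity(current_instances, observed_metric, target_metric,
                     min_size=1, max_size=10):
    """Estimate instance count needed to bring a metric back to its target.

    Simplified proportional rule for illustration only; min_size and
    max_size stand in for the group's configured bounds.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    proposed = math.ceil(current_instances * observed_metric / target_metric)
    # Clamp to the configured bounds so the group never scales past them.
    return max(min_size, min(max_size, proposed))


# Hypothetical example: 4 instances at 90% average CPU with a 60% target.
print(desired_capacity(4, observed_metric=90, target_metric=60))
```

The same rule scales in during quiet periods, which is where the savings over peak-sized provisioning would come from.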

Step 3: Spot Instances and Savings Plans

To reduce costs for non-critical workloads, we:

- Introduced Spot Instances for AI model training, achieving up to 70% savings.

- Enrolled in Savings Plans for unpredictable workload types, locking in discounted rates for compute services.
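
The effect of shifting part of a workload to Spot capacity can be estimated with simple arithmetic. The sketch below uses the 70% upper bound quoted above as the Spot discount. The hourly rate, monthly hours, and share of hours moved to Spot are hypothetical figures chosen only for illustration.

```python
def monthly_compute_cost(on_demand_rate, hours, spot_share, spot_discount):
    """Blend On-Demand and Spot pricing for a month of compute.

    spot_share is the fraction of hours run on Spot (0 to 1);
    spot_discount is the fractional discount versus On-Demand (0 to 1).
    """
    spot_hours = hours * spot_share
    on_demand_hours = hours - spot_hours
    spot_rate = on_demand_rate * (1 - spot_discount)
    return on_demand_hours * on_demand_rate + spot_hours * spot_rate


# Hypothetical inputs: $3.00/hour GPU instance, 1,000 hours per month,
# half of the hours moved to Spot at the best-case 70% discount.
baseline = monthly_compute_cost(3.00, 1000, spot_share=0.0, spot_discount=0.7)
blended = monthly_compute_cost(3.00, 1000, spot_share=0.5, spot_discount=0.7)
savings_pct = (baseline - blended) / baseline * 100
print(f"baseline={baseline:.2f} blended={blended:.2f} savings={savings_pct:.1f}%")
```

Because Spot capacity can be reclaimed, an estimate like this only holds for jobs that checkpoint and can resume after an interruption.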

Step 4: Optimizing Storage

- Transitioned infrequently accessed datasets to S3 Glacier for long-term archival.

- Enabled S3 Intelligent-Tiering to automatically move data between storage classes based on usage patterns.
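
An archival rule of this kind can be expressed as an S3 lifecycle configuration. The sketch below builds one as a plain dictionary in the shape accepted by boto3's `put_bucket_lifecycle_configuration`. The rule ID, prefix, and 90-day threshold are hypothetical placeholders, not the client's actual settings.

```python
def build_lifecycle_configuration(rule_id, prefix, archive_after_days):
    """Build an S3 lifecycle configuration that archives objects to Glacier.

    Returns a dict only; nothing is sent to AWS. All argument values are
    supplied by the caller and are illustrative in this example.
    """
    if archive_after_days < 0:
        raise ValueError("archive_after_days must be non-negative")
    return {
        "Rules": [
            {
                "ID": rule_id,
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": archive_after_days, "StorageClass": "GLACIER"}
                ],
            }
        ]
    }


# Hypothetical rule: archive raw slide images 90 days after creation.
config = build_lifecycle_configuration("archive-raw-slides", "raw-slides/", 90)
print(config["Rules"][0]["Transitions"])

# The dict could then be applied with boto3, for example:
#   s3.put_bucket_lifecycle_configuration(
#       Bucket="example-bucket", LifecycleConfiguration=config)
# where "example-bucket" is a placeholder bucket name.
```

For data with unknown access patterns, the transition's `StorageClass` could instead be `INTELLIGENT_TIERING`, leaving the tiering decision to S3.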

Step 5: Monitoring and Governance

We implemented FinOps practices by:

- Setting up budget alerts and anomaly monitoring using AWS Budgets and AWS Cost Anomaly Detection.

- Establishing governance policies to prevent resource sprawl, including tag-based resource tracking.
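
Tag-based tracking depends on every resource carrying the tags that cost reports are grouped by. As a rough sketch of how such a policy might be audited, the snippet below reports resources that are missing required tags. The tag keys, resource IDs, and function name are hypothetical. A real audit would pull the inventory from AWS rather than from a hard-coded dictionary.

```python
# Hypothetical tagging policy: every resource must carry these tag keys.
REQUIRED_TAGS = frozenset({"project", "owner", "environment"})


def find_untagged(resources, required=REQUIRED_TAGS):
    """Map each non-compliant resource ID to its sorted list of missing tags.

    resources maps a resource ID to a dict of its tag keys and values.
    """
    report = {}
    for resource_id, tags in resources.items():
        missing = set(required) - set(tags)
        if missing:
            report[resource_id] = sorted(missing)
    return report


# Hypothetical inventory for illustration.
inventory = {
    "i-training-01": {"project": "slides", "owner": "ml", "environment": "dev"},
    "vol-scratch-07": {"project": "slides"},
    "i-legacy-09": {},
}
print(find_untagged(inventory))
```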

Outcome

Within two months, the client achieved:

- 30% Reduction in Monthly Costs: Through right-sizing, auto-scaling, and Spot Instances.

- Improved Resource Utilization: Idle and underutilized resources were terminated.

- Enhanced Cost Visibility: Dashboards provided clear insights into spending trends and forecasts.

- Sustained Savings: Policies ensured ongoing cost optimization without manual intervention.
